The Rise of Chiplet GPUs: What It Means for the Future of Gaming Graphics

For decades, GPUs have been designed as monolithic chips. Every core, memory controller, and cache block was etched onto one massive piece of silicon. This design worked, but it hit limits in recent years. Modern GPUs are so large and complex that yields (the share of usable chips on each silicon wafer) began to suffer, because the larger a die is, the more likely a single manufacturing defect lands on it and ruins the whole chip. That meant higher costs for manufacturers and higher prices for gamers.

Enter the chiplet revolution. Inspired by what AMD achieved with its Ryzen CPUs, chiplet-based GPUs are now becoming a reality, starting with AMD’s RDNA 3 and soon expanding further across the industry. Nvidia is rumored to be exploring chiplet designs for future GeForce cards as well.

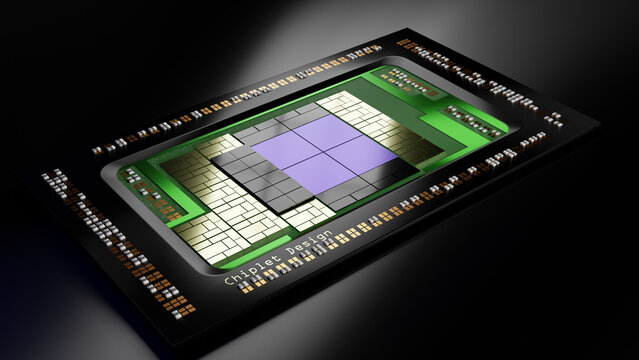

What Are Chiplet GPUs?

Instead of building one giant GPU, manufacturers break the design into multiple smaller dies called chiplets. These chiplets are then connected using a high-speed interconnect.

For example:

- One chiplet might handle the compute units (shader cores, RT cores, AI accelerators).

- Another chiplet could manage memory controllers and cache.

- Future designs could even allow mixing different chiplets optimized for specific tasks.

This modular approach makes production more efficient. Smaller dies have higher yields because a single defect scraps one small chiplet rather than an entire large chip, which reduces wasted silicon. It also allows scaling: need more performance? Add more chiplets.
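To see why smaller dies yield better, here is a rough sketch using the simple Poisson yield model, in which the chance that a die has no defects falls exponentially with its area. The die areas and the defect density below are illustrative assumptions, not figures from any real GPU or foundry.

```python
import math

def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Expected fraction of defect-free dies under a simple Poisson model."""
    area_cm2 = area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-defects_per_cm2 * area_cm2)

# Assumed values, chosen only for illustration.
DEFECTS_PER_CM2 = 0.1
MONOLITHIC_AREA_MM2 = 600.0
CHIPLET_AREA_MM2 = 150.0  # four of these add up to the monolithic area

monolithic_yield = poisson_yield(MONOLITHIC_AREA_MM2, DEFECTS_PER_CM2)
chiplet_yield = poisson_yield(CHIPLET_AREA_MM2, DEFECTS_PER_CM2)

print(f"Monolithic die yield: {monolithic_yield:.1%}")
print(f"Single chiplet yield: {chiplet_yield:.1%}")
```

Under these assumptions, roughly 55% of the large dies come out defect-free, compared with roughly 86% of the small ones. Because chiplets can be tested before they are packaged together, a defect costs 150 mm² of silicon instead of 600 mm².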

The Benefits for Gamers

- Lower Prices (Eventually): Manufacturing efficiency could bring down GPU costs in the long term. Early implementations have not been much cheaper, but the savings should grow as designs and packaging mature.

- Better Performance Scaling: Multi-chip GPUs can pack more compute resources than a single monolithic die could reasonably handle. That opens the door for future flagship cards to grow in performance without ballooning into unmanageable sizes.

- More Flexibility: Chiplet designs could allow manufacturers to create a wider range of products more easily. Instead of designing a completely new GPU for every tier, they could scale performance by mixing and matching chiplets.

- Improved Efficiency: Separating functions into dedicated chiplets allows fine-tuning for power efficiency. For example, AMD’s RDNA 3 places memory controllers and cache on separate memory cache dies (MCDs) around a central graphics compute die, an arrangement meant to add bandwidth without massively increasing power draw.
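As a rough sketch of the mix-and-match idea, the snippet below derives memory specs for three product tiers from nothing but the number of memory chiplets attached. The per-chiplet figures (a 64-bit memory interface and 16 MB of cache each) are modeled on AMD's published RDNA 3 memory cache die specs, while the tier names and the `memory_config` helper are hypothetical.

```python
# Per-chiplet figures modeled on RDNA 3's memory cache dies; treat as assumptions.
BUS_BITS_PER_CHIPLET = 64
CACHE_MB_PER_CHIPLET = 16

def memory_config(num_chiplets: int) -> dict:
    """Total memory bus width and cache for a given memory chiplet count."""
    if num_chiplets < 1:
        raise ValueError("a GPU needs at least one memory chiplet")
    return {
        "bus_width_bits": num_chiplets * BUS_BITS_PER_CHIPLET,
        "cache_mb": num_chiplets * CACHE_MB_PER_CHIPLET,
    }

# Hypothetical product tiers that differ only in chiplet count.
tiers = {"midrange": 4, "high-end": 5, "flagship": 6}

for name, count in tiers.items():
    cfg = memory_config(count)
    print(f"{name}: {count} chiplets, "
          f"{cfg['bus_width_bits']}-bit bus, {cfg['cache_mb']} MB cache")
```

The same building block produces a 256-bit, 320-bit, or 384-bit card without a new die design for each tier.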

The Challenges

Of course, chiplet GPUs are not a free win.

- Latency: Splitting the GPU introduces communication delays between chiplets. High-speed interconnects help, but crossing between dies is still slower than keeping everything on one die.

- Software Optimization: Drivers and game engines must learn how to handle the more complex hardware layout. This can take time to mature.

- Early Cost Issues: Ironically, the first chiplet GPUs are not much cheaper because of the complexity of packaging multiple dies together. The long-term promise is savings, but we are not fully there yet.
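The cost trade-off can be sketched with a toy model: the cost of a working GPU is the silicon cost of each die divided by its yield, plus a packaging cost. All of the numbers below (defect density, cost per square millimeter, packaging costs) are made-up assumptions, and the model ignores losses during packaging itself, so read the output as a direction rather than a price.

```python
import math

def die_yield(area_mm2: float, defects_per_cm2: float = 0.1) -> float:
    """Simple Poisson yield: probability that a die has zero defects."""
    return math.exp(-defects_per_cm2 * area_mm2 / 100.0)

def cost_per_good_gpu(die_areas_mm2, cost_per_mm2: float, packaging_cost: float) -> float:
    """Silicon cost per good die, summed over all dies, plus packaging."""
    silicon = sum(area * cost_per_mm2 / die_yield(area) for area in die_areas_mm2)
    return silicon + packaging_cost

COST_PER_MM2 = 0.20  # assumed dollars per mm^2 of wafer area

# One 600 mm^2 die with cheap packaging versus four 150 mm^2 chiplets
# with expensive (early) or cheaper (mature) multi-die packaging.
monolithic = cost_per_good_gpu([600], COST_PER_MM2, packaging_cost=10)
chiplet_early = cost_per_good_gpu([150] * 4, COST_PER_MM2, packaging_cost=100)
chiplet_mature = cost_per_good_gpu([150] * 4, COST_PER_MM2, packaging_cost=30)

print(f"Monolithic:                  ${monolithic:.2f}")
print(f"Chiplet (early packaging):   ${chiplet_early:.2f}")
print(f"Chiplet (mature packaging):  ${chiplet_mature:.2f}")
```

With these assumptions, the chiplet design costs slightly more than the monolithic one while packaging is expensive, and clearly less once packaging costs fall.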

Nvidia’s Chiplet Future and NVLink

While AMD was the first to launch chiplet-based gaming GPUs, Nvidia is not sitting still. One of Nvidia’s secret weapons is its NVLink interconnect, a technology originally designed for data centers and high-performance computing. NVLink offers far higher bandwidth and lower latency than PCIe, enabling GPUs to share data much more efficiently.

This is where Nvidia’s acquisition of Mellanox, completed in 2020, comes into play. Mellanox specialized in ultra-low-latency networking and high-bandwidth interconnects for data centers. Its products link servers rather than dies on a package, but if that expertise carries over, Nvidia would have a strong foundation for scaling chiplet designs beyond what AMD has currently implemented.

Future Nvidia GPUs could use an evolved form of NVLink to tie together multiple chiplets so seamlessly that the hardware looks and behaves like one giant GPU to software. This would reduce the traditional latency penalty of multi-die designs and allow Nvidia to scale performance to unprecedented levels.

For gamers, that could mean:

- Flagship GeForce cards with massive multi-chip architectures delivering performance leaps beyond monolithic designs.

- Consistent frame pacing, even in high-resolution, ray-traced workloads, because interconnect bottlenecks are minimized.

- Potentially more headroom for advanced features like AI-driven rendering, path tracing, and ultra-high-refresh gaming.

If Nvidia executes this correctly, its chiplet GPUs could leapfrog AMD’s early designs by leveraging NVLink and Mellanox’s networking heritage to build faster, lower-latency multi-chip systems.

What the Future Looks Like

Looking forward, chiplet GPUs could completely change how graphics hardware is built. Here are some possibilities:

- Hybrid Chiplets: Mixing different chiplets specialized for ray tracing, AI upscaling, or rasterization.

- Scalable Designs: Imagine a GPU that can scale from a midrange card to a flagship just by adding more compute chiplets.

- Cross-Vendor Trends: Nvidia is likely to deploy NVLink-based chiplets in future architectures, while AMD continues to refine RDNA’s modular approach.

- Integration with CPUs: As interconnects get faster, we might see CPU and GPU chiplets on the same package for ultra-low-latency systems.

Q&A: Chiplet GPUs in 2025 and Beyond

Will Nvidia use chiplets in future GPUs?

Most likely. While AMD has already launched chiplet GPUs, Nvidia is expected to introduce its own designs in the near future. Nvidia’s advantage lies in NVLink and its Mellanox acquisition, which could give it a powerful foundation for connecting chiplets with higher bandwidth and lower latency than traditional interconnects.

Why are chiplet GPUs important?

Chiplet GPUs solve major problems with manufacturing large monolithic dies. They improve yields, allow scaling by adding more chiplets, and could reduce costs over time. For gamers, this means better performance and efficiency in future graphics cards.

What is NVLink and why does it matter for gaming GPUs?

NVLink is a high-bandwidth interconnect originally designed for data centers. In gaming GPUs, it could allow Nvidia to connect multiple chiplets so efficiently that the GPU behaves like a single unified chip. This would minimize latency issues that often plague multi-die designs.

Will chiplet GPUs be cheaper than monolithic GPUs?

Not immediately. The first chiplet GPUs may be as expensive, or even more costly, due to the complexity of packaging multiple dies. Over time, however, better yields and more efficient manufacturing should bring costs down.

What will future GPUs look like?

Future GPUs may use hybrid chiplets, with some optimized for rasterization, others for ray tracing, and others for AI rendering. Nvidia and AMD are also exploring ways to integrate GPUs more closely with CPUs, possibly even on the same package.

Final Thoughts

Chiplet GPUs are not just a gimmick. They represent a fundamental shift in how graphics hardware is built, much like the shift chiplets brought to CPUs a few years ago. While the first generations have trade-offs, the long-term potential is massive.

Nvidia in particular could redefine this space by using NVLink and Mellanox’s expertise to build chiplet GPUs with lower latency and higher bandwidth than anything currently available. For gamers, this could mean not just more powerful cards, but a smoother and more consistent experience in future games.

We are still in the early days of this technology, but one thing is clear. The future of GPUs will not be defined by single massive chips. Instead, it will be about how well manufacturers can build and connect smaller, smarter pieces.

Tarl @ Gamertech